The Road to Autonomous Networks: How Hybrid AI and Digital Twins are Revolutionizing Telecom

The vision of fully autonomous telecom networks, operating with minimal human intervention, is rapidly becoming a reality. This evolution is driven by the convergence of powerful technologies like Digital Twins and Artificial Intelligence. As a solution architect in the telecom software R&D space, I see firsthand how these innovations are poised to transform how communications service providers (CSPs) manage and optimize their infrastructure.

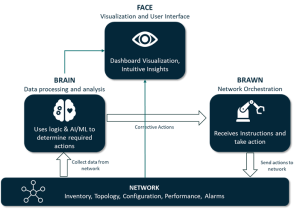

Digital Twin and Autonomous Networks: The Brain and the Body

At the heart of this transformation lies the Network Digital Twin (NDT). Imagine a highly detailed, virtual replica of a physical telecom network – a dynamic, living counterpart that is continuously updated with real-time data such as telemetry, configurations, alarms, traffic patterns, and sensor data. This “virtual mirror” allows for powerful analysis and simulation, acting as the data-driven, AI/ML-powered brain of the network.
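To make the "virtual mirror" idea concrete, here is a minimal sketch of a twin that stays in sync with incoming events and answers a what-if question without touching the live network. The class names, fields, and the `what_if_offload` helper are hypothetical, and the simulation assumes both cells have equal capacity so that load percentages can simply be added; a real NDT would model far more state.

```python
from dataclasses import dataclass, field


@dataclass
class CellState:
    """Mirrored state of one cell (hypothetical fields for illustration)."""
    load_pct: float = 0.0
    active_alarms: set = field(default_factory=set)


class NetworkDigitalTwin:
    """Keeps a virtual copy of per-cell state in sync with incoming events."""

    def __init__(self):
        self.cells = {}

    def ingest(self, cell_id, load_pct=None, alarm_raised=None, alarm_cleared=None):
        """Apply one telemetry or alarm event to the mirrored state."""
        cell = self.cells.setdefault(cell_id, CellState())
        if load_pct is not None:
            cell.load_pct = load_pct
        if alarm_raised is not None:
            cell.active_alarms.add(alarm_raised)
        if alarm_cleared is not None:
            cell.active_alarms.discard(alarm_cleared)

    def what_if_offload(self, source, target, share):
        """Project loads if `share` of the source load moved to the target.

        Assumes equal cell capacity; the mirrored state is left unchanged.
        """
        moved = self.cells[source].load_pct * share
        return {
            source: self.cells[source].load_pct - moved,
            target: self.cells[target].load_pct + moved,
        }


twin = NetworkDigitalTwin()
twin.ingest("cell-A", load_pct=90.0)
twin.ingest("cell-B", load_pct=40.0)
twin.ingest("cell-A", alarm_raised="HIGH_LOAD")

# Simulate shifting a quarter of cell-A's load to cell-B.
projected = twin.what_if_offload("cell-A", "cell-B", share=0.25)
print(projected)
```

The point of the sketch is the separation: `ingest` mutates the mirror, while the simulation only reads from it, so candidate changes can be evaluated before anything is pushed to the network.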

Autonomous Networks (ANs) are telecommunications networks designed to operate with little to no human intervention, striving for a “zero-X” experience: zero-wait, zero-touch, and zero-trouble. Key characteristics of ANs include the ability to self-configure, self-optimize, self-heal, and self-protect, all driven by intent and closed-loop automation.

Digital Twin: The Path to Autonomy

The Network Digital Twin (NDT) is more than just a tool; it’s the fundamental enabler and an integral component of Autonomous Networks. Functioning as the “brain” of autonomous operations, the NDT leverages logic and AI/ML to determine necessary actions through comprehensive data processing and analysis. It generates instructions and corrective actions for the “brawn” – the network orchestration layer. A continuous flow of data from the network (including inventory, topology, configuration, performance, and alarms) is collected and stored in a unified data lake. This continuous loop of monitoring, analysis, and corrective action, visualized through intuitive dashboards, forms the core of an autonomous system.
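The monitor, analyze, act loop described above can be sketched in a few lines. This is an illustration under stated assumptions, not a reference implementation: the `Snapshot` fields, the alarm and command names, and the 0.85 utilization threshold are all hypothetical, and a simple rule-based `analyze` function stands in for the AI/ML logic an actual NDT would use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Snapshot:
    """One monitoring sample for a cell (hypothetical fields)."""
    cell_id: str
    utilization: float  # fraction of capacity in use, 0.0 to 1.0
    alarms: tuple = ()


@dataclass
class Action:
    """A corrective instruction for the orchestration layer."""
    cell_id: str
    command: str


def analyze(snapshot: Snapshot, high_load: float = 0.85) -> Optional[Action]:
    """The "brain": decide whether a snapshot needs a corrective action.

    Rule-based placeholder for the AI/ML analysis; the threshold is assumed.
    """
    if "LINK_DOWN" in snapshot.alarms:
        return Action(snapshot.cell_id, "restart_link")
    if snapshot.utilization > high_load:
        return Action(snapshot.cell_id, "rebalance_load")
    return None


class Orchestrator:
    """The "brawn": records actions here instead of calling a real network API."""

    def __init__(self):
        self.executed = []

    def apply(self, action: Action) -> None:
        self.executed.append(action)


def run_closed_loop(snapshots, orchestrator: Orchestrator) -> None:
    """Monitor -> analyze -> act, once per incoming snapshot."""
    for snapshot in snapshots:
        action = analyze(snapshot)
        if action is not None:
            orchestrator.apply(action)


orchestrator = Orchestrator()
run_closed_loop(
    [
        Snapshot("cell-1", utilization=0.40),
        Snapshot("cell-2", utilization=0.92),
        Snapshot("cell-3", utilization=0.30, alarms=("LINK_DOWN",)),
    ],
    orchestrator,
)
for action in orchestrator.executed:
    print(action.cell_id, action.command)
```

Keeping the decision logic (`analyze`) separate from execution (`Orchestrator`) mirrors the brain and brawn split: the analysis can be swapped for a trained model without changing how actions reach the network.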

Blueprint for AI Autonomous Networks: Key Pillars

Achieving AI autonomous networks requires a robust architectural foundation. A blueprint architecture for AI autonomous networks rests on several key pillars, and building them entirely on-premise raises the following challenges:

- High Upfront Costs: Investing in on-premise data centers with the necessary GPUs and storage requires a huge initial investment.

- Operational Complexity: Ongoing management of these sophisticated systems demands specialized expertise, leading to increased overhead.

- Scalability Limitations: Expanding capacity on-premise is often slow and costly, restricting flexibility in adapting to evolving network demands.

- Fragmented Tools: Without a unified platform, teams work with different, unintegrated tools and processes, which slows AI/ML development.

- Complex Pipelines: Setting up AIOps/MLOps on-premise often involves manual and inconsistent processes.

- Slower Innovation: On-prem deployments might lack the readily available pre-built tools and services offered by cloud platforms, potentially delaying the adoption of new technologies.

- Data Management Struggles: Handling large and diverse datasets on-premise can be cumbersome and inefficient.

- Distributed Management Difficulty: Managing distributed network components and their data becomes harder in an on-premise setup compared to centralized cloud environments.

Hybrid Network AI: The Optimal Solution

The solution isn’t simply an either/or choice between on-premise and cloud; it’s about combining their strengths. Hybrid Network AI offers a flexible and optimal solution for rApp development and deployment, mitigating the individual drawbacks of each approach and maximizing their benefits. This approach leverages the cloud for intensive tasks while keeping latency-sensitive operations closer to the network.

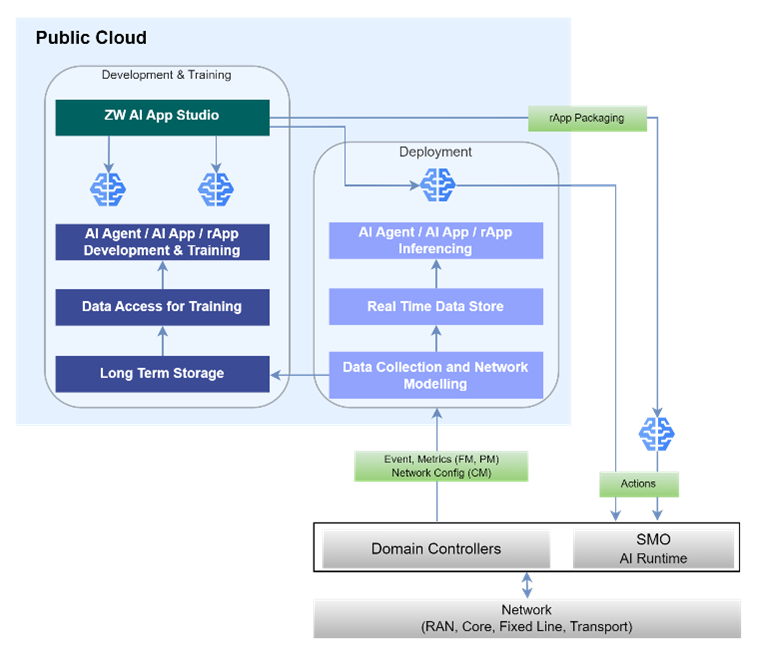

Implementing Hybrid AI with Google Cloud Platform (GCP)

A hybrid AI architecture with GCP enables a powerful combination of cloud-based development and training with network-edge inferencing.

- Development & Training (Cloud-based): A dedicated AI App Studio within GCP facilitates the visual design and development of AI models. Data access for training is provided through long-term storage, leveraging GCP’s robust data lake capabilities. This allows for training AI agents and apps using vast datasets in a scalable and cost-effective manner.

- Deployment & Inferencing (Hybrid): AI models trained in the cloud can be packaged and deployed to run in the network for latency-sensitive inferencing, leveraging platforms like O-RAN SMO. Simultaneously, AI models can also run in the cloud with access to broader datasets, providing a comprehensive view. Both on-premise and cloud-based models can propose actions to optimize the network in real time. Real-time data stores and data collection/network modelling components ensure continuous feedback.

This hybrid model allows CSPs to harness the power of cloud-scale AI for model development and optimization, while ensuring low-latency decision-making at the network edge where it matters most.
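One way to picture the split is as a placement decision per inference workload: anything whose latency budget is tighter than a cloud round trip stays in the network, and the rest can run in the cloud. The sketch below is illustrative only; the use-case names, latency budgets, and the 80 ms round-trip figure are assumed values, and a real deployment would weigh other factors such as data locality and cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceWorkload:
    """An inference use case and the response time it can tolerate (hypothetical)."""
    use_case: str
    latency_budget_ms: float


def choose_placement(workload: InferenceWorkload, cloud_round_trip_ms: float = 80.0) -> str:
    """Return "edge" when the budget cannot absorb a cloud round trip, else "cloud".

    The default round-trip time is an assumed figure for illustration.
    """
    if workload.latency_budget_ms < cloud_round_trip_ms:
        return "edge"
    return "cloud"


workloads = [
    InferenceWorkload("beam-management", latency_budget_ms=10.0),
    InferenceWorkload("anomaly-detection", latency_budget_ms=500.0),
    InferenceWorkload("capacity-forecast", latency_budget_ms=60_000.0),
]

placements = {w.use_case: choose_placement(w) for w in workloads}
for use_case, placement in placements.items():
    print(f"{use_case}: {placement}")
```

Training does not appear in this decision at all, which matches the model described above: it stays in the cloud, and only the packaged model is placed at the edge or in the cloud for inferencing.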

Zinkworks Solutions: Analytics and AI/ML Applications

At Zinkworks, a telecom software R&D specialist, our offerings are designed to facilitate this transition to autonomous networks:

- ETL Pipelines

- Analytics Use Cases

- Network AI Apps and Agents

- AI App Studio

- Integration with SMO

By combining these advanced software solutions with the strategic adoption of Hybrid Network AI, CSPs can effectively navigate the complexities of modern telecom environments, unlocking the full potential of autonomous networks. Contact us to learn more about our innovative solutions and collaborative opportunities.

Author

Jatin Marwaha, Chief Technology Officer, Telecoms and OSS