Author

Jatin Marwaha, Chief Technology Officer, Telecoms and OSS


Software development has always been a dynamic field, constantly evolving to meet the complex demands of an increasingly digital world. Today it is undergoing its most profound transformation yet, driven by the shift from explicit rule-based systems to intelligent, autonomous agents.

This blog aims to demystify this evolution, focusing on Agentic AI through a developer’s lens. Readers can expect to journey through the historical shifts in how we build software, understand the core components and capabilities of AI agents, and gain insight into the changing developer blueprint, from coding rigid logic to orchestrating dynamic, intelligent systems. We will highlight the new skills required, the architectural considerations, and the unique challenges and opportunities that arise when embracing this autonomous future.

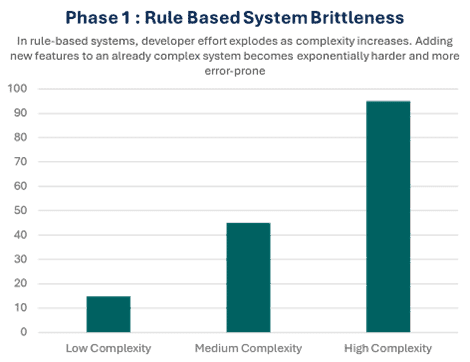

Before modern AI, software development was defined by Phase 1: The Era of Explicit Programming, and its rule-based systems.

Here, developers explicitly coded every piece of logic, every if-else path and state machine, anticipating all scenarios to hardcode exact responses. This made systems wonderfully predictable and deterministic, easy to control and debug for simpler applications. Many of us grew up building this way.

However, this approach quickly hit limits. As problems grew complex, the sheer number of rules led to “if-else hell,” making software brittle and hard to maintain. New requirements meant time-consuming manual code changes, and critically, the system simply couldn’t adapt to anything outside its predefined rules.

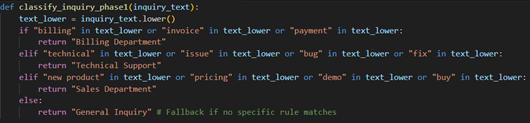

Let’s look at the code below, which classifies a customer inquiry so it can be routed to the correct support department (e.g., ‘Sales’, ‘Support’, ‘Billing’).

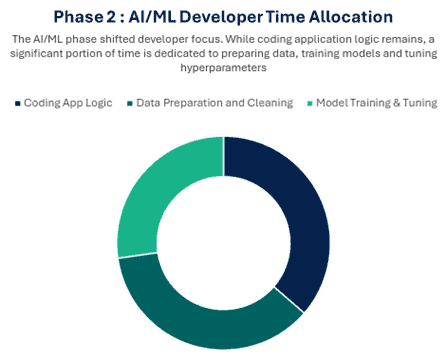
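As a text companion to the code image above, here is a minimal Python sketch of the same rule-based idea. The keyword lists and the default department are illustrative assumptions, not the author’s actual rules. Note how an inquiry that uses a word nobody anticipated (“bill” instead of “invoice”) silently falls through to the default.

```python
# Phase 1 sketch: every routing decision is an explicit, hand-written rule.
# The keywords below are illustrative assumptions, not the original code.

def classify_inquiry(text: str) -> str:
    """Route a customer inquiry to 'Sales', 'Billing' or 'Support' using fixed rules."""
    t = text.lower()
    if "invoice" in t or "refund" in t or "charge" in t:
        return "Billing"
    elif "price" in t or "quote" in t or "buy" in t:
        return "Sales"
    elif "error" in t or "not working" in t or "crash" in t:
        return "Support"
    else:
        # Anything the rules did not anticipate lands in a default bucket.
        return "Support"


print(classify_inquiry("I was charged twice on my invoice"))   # Billing
print(classify_inquiry("Can I get a quote for 50 licences?"))  # Sales
print(classify_inquiry("My bill looks wrong"))                 # Support: no rule mentions "bill"
```

Every new phrasing a customer invents means another `elif`, which is exactly how “if-else hell” grows.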

Following the limitations of purely rule-based systems, Phase 2: The AI/ML Catalyst emerged, fundamentally shifting how we built software.

Instead of coding every possible scenario, the focus moved to training models and neural networks. Developers started feeding large datasets to algorithms, allowing the software to learn patterns and make predictions or classifications. This meant a system could then behave “reasonably” even for situations it hadn’t been explicitly programmed for, thanks to its learned understanding.

This development paradigm replaced significant portions of the hard-coded logic from Phase 1, making systems more adaptive and capable of handling a broader spectrum of inputs without constant manual updates.

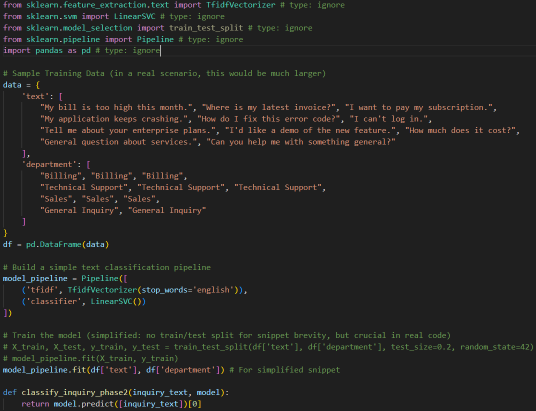

Now let’s look at the code for the same scenario using an AI/ML model.
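As a self-contained illustration of this phase, the sketch below trains a tiny multinomial naive Bayes classifier that learns word statistics from labelled examples instead of relying on hand-written rules. Naive Bayes and the toy training set are our own choices for illustration; a production system would more likely use a library such as scikit-learn and far more data.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lower-case words with simple punctuation stripped."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return [w for w in words if w]


class NaiveBayesRouter:
    """Multinomial naive Bayes with add-one smoothing, learned from labelled examples."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesRouter":
        self.label_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.vocab = set()
        for text, label in zip(texts, labels):
            words = tokenize(text)
            self.word_counts[label].update(words)
            self.vocab.update(words)
        return self

    def predict(self, text: str) -> str:
        total = sum(self.label_counts.values())
        scores = {}
        for label, count in self.label_counts.items():
            # log P(label) + sum over known words of log P(word | label)
            score = math.log(count / total)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for w in tokenize(text):
                if w in self.vocab:  # ignore words never seen in training
                    score += math.log((self.word_counts[label][w] + 1) / denom)
            scores[label] = score
        return max(scores, key=scores.get)


# Toy training data, invented for illustration.
texts = [
    "I was charged twice, please refund me",
    "My invoice shows the wrong amount",
    "Why is my bill so high this month",
    "What is the price of the enterprise plan",
    "I would like a quote for 50 licences",
    "Can I buy more seats for my team",
    "The app crashes when I log in",
    "I get an error when uploading files",
    "The dashboard is not loading",
]
labels = ["Billing"] * 3 + ["Sales"] * 3 + ["Support"] * 3

router = NaiveBayesRouter().fit(texts, labels)
print(router.predict("My bill looks wrong"))  # Billing
```

Unlike a keyword rule, “bill” is routed to Billing here because the model saw it in a training example; nobody had to write a rule for it.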

This journey accelerated dramatically with the advent of Large Language Models (LLMs), marking the beginning of Phase 3: The LLM Revolution.

The LLM Revolution (Phase 3) truly changed the game. Instead of training neural networks ourselves, we can now program powerful pretrained networks using natural language, tapping into a synthetic intelligence with a “gigantic memory” of learned knowledge and impressive reasoning abilities.

LLMs function much like a central “operating system”, acting as the brain for many intelligent applications. For many tasks we no longer need to write detailed rules or train a dedicated model; we describe the task in a prompt, configure the LLM, and let it infer the answer.

Let’s look at the code for the same scenario using an LLM.

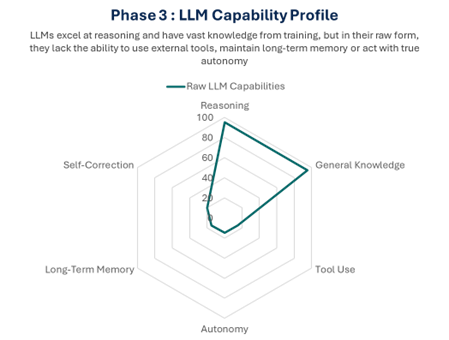
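A minimal sketch of the LLM approach follows. The model call is hidden behind a `complete(prompt) -> str` function, a hypothetical hook you would wire to your provider’s SDK; `fake_llm` is an offline stand-in so the example runs. The prompt wording and the fallback to ‘Support’ are assumptions. Because model output is free text, the sketch validates the answer against the allowed labels before using it.

```python
from typing import Callable

DEPARTMENTS = ("Sales", "Support", "Billing")

# Assumed prompt wording; in practice you iterate on it like any other code.
PROMPT_TEMPLATE = (
    "You are a customer-service router.\n"
    "Classify the inquiry into exactly one department: {departments}.\n"
    "Reply with the department name only.\n\n"
    "Inquiry: {inquiry}\n"
    "Department:"
)


def route_with_llm(inquiry: str, complete: Callable[[str], str]) -> str:
    """Build a prompt, ask the model via `complete`, and validate its free-text answer."""
    prompt = PROMPT_TEMPLATE.format(departments=", ".join(DEPARTMENTS), inquiry=inquiry)
    answer = complete(prompt).strip().strip(".").capitalize()
    # Never trust raw model output: fall back to a safe default if it is off-list.
    return answer if answer in DEPARTMENTS else "Support"


def fake_llm(prompt: str) -> str:
    """Hypothetical offline stand-in for a real model call."""
    inquiry = prompt.rsplit("Inquiry:", 1)[1].lower()
    return "billing." if "bill" in inquiry else "Support"


print(route_with_llm("My bill looks wrong", fake_llm))  # Billing
```

The routing logic now lives mostly in the prompt; the surrounding code only formats the request and guards the response.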

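However, despite their immense capabilities, it’s crucial for developers to understand the nuances and inherent limitations of LLMs when building with them. While they excel at reasoning and generating content, they are stateless: each call is independent, and the model remembers nothing from earlier interactions unless that history is sent again with the request. They are also bounded by a finite context window, which limits how much information they can consider at once.

This stateless nature and context window constraint highlight that a raw LLM, by itself, isn’t truly autonomous; it lacks persistent memory, tool-use capability, and the ability to plan and self-correct across complex, multi-step tasks. This is where Agentic AI steps in.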
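Because the model is stateless, the application has to resend the relevant history on every call and trim it to fit the context window. The sketch below keeps the most recent messages that fit a budget. The `role`/`content` message shape mirrors common chat APIs, and counting one token per word is a simplifying assumption; real tokenizers count differently.

```python
def estimate_tokens(message: dict) -> int:
    """Crude token estimate: one token per whitespace-separated word (assumption)."""
    return len(message["content"].split())


def build_context(history: list[dict], new_message: str, max_tokens: int = 4000) -> list[dict]:
    """Return the newest messages that fit in the budget, oldest first.

    The model remembers nothing between calls, so this list is resent every time.
    """
    messages = history + [{"role": "user", "content": new_message}]
    kept: list[dict] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break  # everything older than this is effectively forgotten
        kept.append(message)
        used += cost
    return list(reversed(kept))


history = [
    {"role": "user", "content": " ".join(["a"] * 20)},
    {"role": "assistant", "content": " ".join(["b"] * 20)},
    {"role": "user", "content": " ".join(["c"] * 20)},
]
context = build_context(history, "route this new inquiry please", max_tokens=50)
print(len(context))  # 3: the oldest message no longer fits
```

If even the newest message exceeds the budget, this sketch returns an empty list; a real application would need to truncate or summarise instead. Anything that falls off the end is lost, which is why agents need a separate long-term memory.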

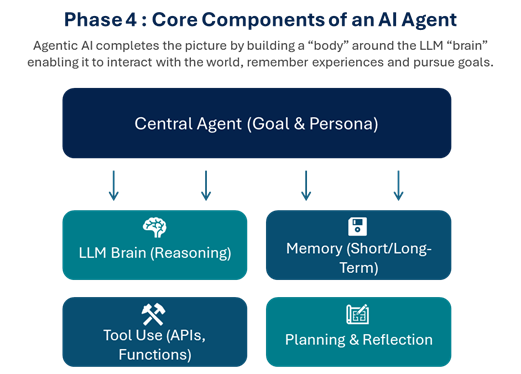

So, what exactly is an AI Agent for us, the developers? It’s much more than a simple LLM API call. An AI agent is an autonomous software system that can perceive its environment, reason through problems, make plans, and execute actions to achieve a specific goal.

If you think of the LLM as the brain – providing raw intelligence and language – the agent builds a complete “body” around it. This “body” equips the LLM with the capabilities it needs to actually do things.

From a developer’s perspective, this “body” is built from several key components. First, there’s Memory: not just the LLM’s vast trained knowledge but dedicated short-term memory (like a context window for current tasks) and long-term memory, often implemented with vector databases or knowledge graphs for persistent recall. Crucially, agents also gain Tool Use (or Function Calling). These “limbs” allow the agent to interact with the real world by calling APIs, querying databases, searching the web, or running custom code.
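To make long-term memory more concrete, here is a minimal sketch of vector-style recall. A bag-of-words count vector stands in for a learned embedding, and an in-memory list stands in for a vector database; both are deliberate simplifications. The agent stores facts, then retrieves the ones most similar to a query by cosine similarity.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return Counter(w for w in words if w)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """In-memory stand-in for a vector database used as long-term memory."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = VectorMemory()
memory.add("Customer ACME prefers email over phone calls.")
memory.add("Customer ACME is on the enterprise plan.")
memory.add("The office closes at 6pm on Fridays.")
print(memory.search("Which plan is ACME on?"))  # ['Customer ACME is on the enterprise plan.']
```

Swapping `embed` for a real embedding model and the list for a vector database changes the quality and scale of recall, not the shape of the interface.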

Finally, agents feature Planning & Task Decomposition to break down complex goals into manageable steps, and Reflection / Self-Correction, enabling them to evaluate their own progress, identify errors, and adjust their strategy.
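The loop below sketches how tool use, planning and reflection fit together. Tools are plain functions registered by name; `plan` and `reflect` are hypothetical stand-ins for what an LLM would do (decompose the goal into tool calls, and critique a failure), and the invoice data is invented. On the first attempt the lookup fails on a lower-case customer ID; reflection proposes a fix and the agent retries.

```python
from typing import Any, Callable, Optional

TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_open_invoices(customer: str) -> list[float]:
    # Hypothetical data; a real tool would call a billing API or database.
    invoices = {"ACME": [120.0, 80.5]}
    return invoices[customer]  # raises KeyError for an unknown customer ID


@tool
def total(amounts: list[float]) -> float:
    return sum(amounts)


def plan(customer: str) -> list[tuple[str, dict]]:
    """Stand-in for LLM task decomposition: the goal as an ordered list of tool calls."""
    return [("get_open_invoices", {"customer": customer}), ("total", {})]


def reflect(customer: str, error: Exception) -> Optional[str]:
    """Stand-in for LLM self-critique: propose a corrected input, or None to give up."""
    if isinstance(error, KeyError) and customer != customer.upper():
        return customer.upper()  # guess: customer IDs may be upper-case
    return None


def run_agent(customer: str, max_attempts: int = 3) -> tuple[Optional[float], list[str]]:
    """Plan, act with tools, and reflect on failures until done or out of attempts."""
    log: list[str] = []
    for attempt in range(1, max_attempts + 1):
        result: Any = None
        try:
            for name, args in plan(customer):
                # Steps with no explicit args consume the previous step's output.
                result = TOOLS[name](**args) if args else TOOLS[name](result)
                log.append(f"attempt {attempt}: {name} -> {result}")
            return result, log
        except Exception as err:  # broad on purpose: any tool failure triggers reflection
            log.append(f"attempt {attempt}: failed with {err!r}")
            fixed = reflect(customer, err)
            if fixed is None:
                break
            customer = fixed
    return None, log


result, log = run_agent("acme")
print(result)  # 200.5
```

The step log produced along the way is also the raw material you would feed into monitoring and debugging of a real agent.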

This complete package allows agents to be proactive and goal-oriented, moving far beyond reactive LLM responses to truly autonomous problem-solving.

As we transition from understanding what an AI agent is, the critical question for developers becomes: how do we actually build these systems?

The blueprint for software development is undergoing a fundamental change, moving us from merely “coding logic” to “orchestrating intelligence.” This means less time writing explicit if-else trees for every scenario and more time defining an agent’s persona, overall goals, the tools it can use, and robust feedback loops. Our primary task shifts to designing effective prompts that guide an agent’s behaviour and reasoning, rather than dictating every single step of its execution.

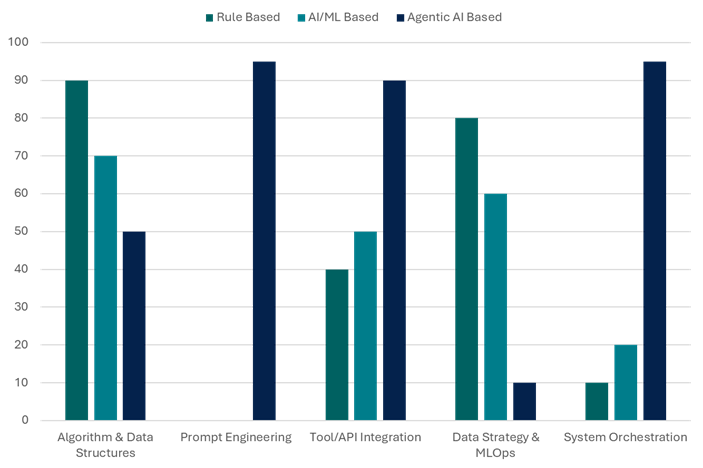

This new paradigm demands an evolving skill set for developers. While traditional programming fundamentals remain essential, specialized areas are now critical.

Beyond direct implementation, developers must also master Memory Management: designing how agents store and retrieve persistent knowledge using vector databases or knowledge graphs. Monitoring & Observability are more crucial than ever for debugging non-deterministic agent interactions and tracking performance. In practice, this often means building clear, intuitive visualisations of system behaviour, for example, rendering polygons in React to represent spatial data, boundaries, or network states in real time. We also need new strategies for Evaluation & Testing to ensure these autonomous systems behave as expected across a range of scenarios.
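Because agents are non-deterministic, a single passing run proves little. One common strategy, sketched here under simplifying assumptions, is to run each test case many times and compare its pass rate against a threshold. `flaky_agent` is a simulated agent, made deterministic so the example is reproducible: it is always right on billing and right only every other call on sales. The 20 trials and the 0.9 threshold are illustrative choices.

```python
import itertools
from typing import Callable


def evaluate(agent: Callable[[str], str], cases: list[tuple[str, str]], trials: int = 20) -> dict[str, float]:
    """Run each case `trials` times and return its pass rate."""
    return {
        prompt: sum(agent(prompt) == expected for _ in range(trials)) / trials
        for prompt, expected in cases
    }


def failing_cases(report: dict[str, float], threshold: float = 0.9) -> list[str]:
    """Cases whose pass rate falls below the (assumed) acceptance threshold."""
    return [prompt for prompt, rate in report.items() if rate < threshold]


# Simulated non-deterministic agent, reproducible for the example.
_coin = itertools.cycle([True, False])


def flaky_agent(inquiry: str) -> str:
    if "invoice" in inquiry.lower():
        return "Billing"
    return "Sales" if next(_coin) else "Support"


cases = [("Where is my invoice?", "Billing"), ("I want a quote for 10 seats", "Sales")]
report = evaluate(flaky_agent, cases)
print(report)                 # {'Where is my invoice?': 1.0, 'I want a quote for 10 seats': 0.5}
print(failing_cases(report))  # ['I want a quote for 10 seats']
```

A single run of the sales case could have passed by luck; the pass rate exposes that it is right only half the time.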

Finally, a robust Data Strategy is essential, as data informs not only agent behaviour but also its continuous learning and adaptation. Embracing this new blueprint means becoming architects of adaptive, intelligent systems rather than just coders of static instructions.

We’ve journeyed through the evolution of software development, from the explicit, rule-bound world, through the model-training era, and into the natural language programming of LLMs. It’s clear that Agentic AI isn’t just another feature; it represents a fundamental shift in how we build software. We are moving beyond systems that simply predict or respond, towards truly autonomous entities capable of reasoning and self-correction. This new paradigm empowers us to tackle problems of unprecedented complexity, automating workflows and creating adaptive solutions that were once confined to science fiction.

For developers, this marks a profound transformation. Our role is evolving from meticulously coding every logical path to becoming architects and orchestrators of intelligent, self-directed systems. This means honing new skills in advanced prompt engineering, robust tool integration, memory management, and leveraging powerful orchestration frameworks.

While the opportunities agents offer for rapid innovation are undeniable, we must also approach this future with a critical eye, actively addressing challenges like non-determinism and cost management and, most importantly, building robust safety and ethical guardrails around these powerful yet fallible systems.

The era of agentic AI is not just a technological leap; it’s a call for a new developer mindset. It invites us to think more strategically about defining goals and constraints, rather than micro-managing every execution detail. The future of software development will be defined by our ability to design and collaborate with these autonomous intelligences. So, embrace the challenge: start experimenting with agentic frameworks, deepen your understanding of these new paradigms, and prepare to shape a future where software doesn’t just execute instructions, but intelligently navigates and acts within the world.